This is the sixth article in my series on creating comprehensive automation. Click here to jump to the first article.

The Machine Service Group Role Schema (MSGR)

MSGR Option Overview

Machines

The machine is the abstraction of a host. Virtual, physical, blade, leased: all concepts of a host are attached to the machine. Each machine entry is a host instance.


I will use the convention of id being the integer primary key for every table, to give a universal way to access specific data (no searching on names for explicit references).

The name is the general label given to this host. I would recommend it be something generic and based on the location, hardware and other physical configuration, as this is tied to the hardware specification, and not its purpose in our system.

Hardware is a reference to a hardware specification, which I have left out of this to simplify. A very basic use case would be to make this a string, and store things like “dell 2850” there, so that the manufacturer and model are known. A more advanced case is to have a database with all the makes, models, amounts of RAM and disks, and other physical configuration information, so that everything that may be needed to provision, configure, and troubleshoot this hardware is known in advance and available to scripts for processing.

The location can similarly be a simple string of the data center and row, such as “sjc” or more descriptively, “sjc equinix 555 5 10 20” for San Jose, Equinix, cage 555, row 5, rack 10, rack unit height 20. Preferably this is pointing to a hierarchical regional location database that pinpoints the region, state, city, location/address, cage, row, rack, and RU in a way that can be used readily by scripts running against this data.
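As a minimal sketch of the simplest form of this table, here it is in SQLite with hardware and location kept as plain strings. The table name, the example host name, and the helper functions are my own illustration and not part of any existing tool.

```python
import sqlite3

# Hypothetical minimal machine table: hardware and location are plain
# strings here, which is the "very basic use case" described above.
MACHINE_SCHEMA = """
CREATE TABLE machine (
    id       INTEGER PRIMARY KEY,   -- universal handle for explicit references
    name     TEXT NOT NULL UNIQUE,  -- generic label tied to hardware, not purpose
    hardware TEXT,                  -- e.g. 'dell 2850'
    location TEXT                   -- e.g. 'sjc equinix 555 5 10 20'
)
"""


def create_machine(conn, name, hardware, location):
    """Insert a machine and return its integer id."""
    cur = conn.execute(
        "INSERT INTO machine (name, hardware, location) VALUES (?, ?, ?)",
        (name, hardware, location),
    )
    return cur.lastrowid


def get_machine(conn, machine_id):
    """Fetch a machine by id, never by name."""
    return conn.execute(
        "SELECT id, name, hardware, location FROM machine WHERE id = ?",
        (machine_id,),
    ).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute(MACHINE_SCHEMA)
machine_id = create_machine(
    conn, "sjc-555-5-10-20", "dell 2850", "sjc equinix 555 5 10 20"
)
```

Scripts hold on to `machine_id` and pass it around, so renaming a machine later does not break any explicit reference.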

Services

A service is a unique business service, and should include the version number. I have simplified it here to only be a name, so the string for the name can include the version number as well if versioning of this service is important.


The class_name can be used for systems like Puppet to create a hierarchy of includes for a machine. Includes should be ordered as: service, group, then role. This order allows all general include information first, then data set specific values can be populated, and finally all of the packages, directories and other role specific operations can be included.

The wiki_url field allows a link to documentation about this service, and the info field is for internal documentation text about this service.
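The include ordering can be sketched in a few lines. This assumes each service, group and role carries the name, class_name, wiki_url and info fields described above. The `Layer` type and the class names in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One service, group or role entry (field names follow the text above)."""
    name: str
    class_name: str
    wiki_url: str = ""
    info: str = ""


def ordered_includes(service, group, role):
    """Return class names in include order: service, then group, then role."""
    return [service.class_name, group.class_name, role.class_name]


# Hypothetical entries for illustration only.
service = Layer("Ticket System 1.0", "ticket_system")
group = Layer("Legal", "ticket_system::legal")
role = Layer("Report", "ticket_system::legal::report")

includes = ordered_includes(service, group, role)
```

A templating step could then emit one include line per entry of `includes`, in that order.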

Groups

This is essentially the same as a service, but should not need any version information, as it is about a particular set of data already.


All other schema information is the same as the service.

Roles

Role’s fields are similar to groups.


Like services, roles could use a version field, or include the version of the role in the name.

You can find out more about the relationships between services, groups and roles here.

Machine Service Group Role

This is the reason we do all of this work.


We now have a mapping from a machine instance to a service, a data set group, and a host role.

Additional common information can be stored about this instance of an MSGR, such as the IP address that this service publicly listens to and the port it listens on.

Additionally, information can be documented about this in text (info) and remotely on a wiki, to track issues with this machine’s use of this service role.

Finally, information about whether this machine is actively running this service’s role or whether it is in maintenance is stored here, so we can make logical decisions based on monitoring, alerting and error correction.
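Here is a rough sketch of the mapping table and of the kind of logical decision it enables. The column names (`ip_address`, `port`, `is_active`, `is_maintenance`), the `service_group` table name, and the sample rows are assumptions for illustration. The real schema may differ.

```python
import sqlite3

SCHEMA = """
CREATE TABLE machine       (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE service       (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE service_group (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE role          (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE msgr (
    id             INTEGER PRIMARY KEY,
    machine_id     INTEGER NOT NULL REFERENCES machine(id),
    service_id     INTEGER NOT NULL REFERENCES service(id),
    group_id       INTEGER NOT NULL REFERENCES service_group(id),
    role_id        INTEGER NOT NULL REFERENCES role(id),
    ip_address     TEXT,     -- address this service publicly listens on
    port           INTEGER,  -- port it listens on
    info           TEXT,     -- internal notes
    wiki_url       TEXT,     -- remote documentation
    is_active      INTEGER NOT NULL DEFAULT 0,
    is_maintenance INTEGER NOT NULL DEFAULT 0
);
"""

# Hypothetical example data: two machines in the same service, group and role.
SAMPLE = """
INSERT INTO machine (id, name) VALUES (1, 'ticket001'), (2, 'ticket002');
INSERT INTO service (id, name) VALUES (1, 'Ticket System');
INSERT INTO service_group (id, name) VALUES (1, 'General');
INSERT INTO role (id, name) VALUES (1, 'Ticket');
INSERT INTO msgr (machine_id, service_id, group_id, role_id,
                  ip_address, port, is_active, is_maintenance)
VALUES (1, 1, 1, 1, '10.0.0.11', 443, 1, 0),
       (2, 1, 1, 1, '10.0.0.12', 443, 1, 1);
"""


def serving_machines(conn, service_id, group_id, role_id):
    """Names of machines that are active and not in maintenance for this MSGR."""
    rows = conn.execute(
        """
        SELECT machine.name
        FROM msgr
        JOIN machine ON machine.id = msgr.machine_id
        WHERE msgr.service_id = ? AND msgr.group_id = ? AND msgr.role_id = ?
          AND msgr.is_active = 1 AND msgr.is_maintenance = 0
        ORDER BY machine.name
        """,
        (service_id, group_id, role_id),
    ).fetchall()
    return [name for (name,) in rows]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executescript(SAMPLE)
in_rotation = serving_machines(conn, 1, 1, 1)
```

Here ticket002 is active but flagged for maintenance, so only ticket001 would be handed to a load balancer template or an alerting rule.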

Options

Option information is separated into its own table here for ease of understanding, but it is probably best to build 3 option tables, one each for services, groups and roles, since then an additional reference table isn’t needed to link the services, groups and roles to their option data. The single shared table is wasteful in use, but can be easier to understand.


This tracks the name of the option, which is like a dynamic field. It tracks the type_name of this option, which will reference an external type system for validation of this data (which I will get into in my next post), and then tracks the version of this option data and who changed it and when.
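To make the versioning idea concrete, here is a small in-memory sketch. It assumes an option also carries a value, and that every change appends a new version rather than overwriting the old one. The class and field names beyond name, type_name and version are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class OptionVersion:
    name: str         # the dynamic field name
    type_name: str    # key into an external type system (not modeled here)
    value: str        # assumed: the option data itself
    version: int      # 1 for the first value, incremented on each change
    changed_by: str   # who made the change
    changed_at: datetime


class OptionHistory:
    """Keeps every version of every option so changes can be audited."""

    def __init__(self):
        self._versions = {}  # option name -> list of OptionVersion

    def set(self, name, type_name, value, changed_by):
        history = self._versions.setdefault(name, [])
        entry = OptionVersion(
            name, type_name, value, len(history) + 1,
            changed_by, datetime.now(timezone.utc),
        )
        history.append(entry)
        return entry

    def current(self, name):
        return self._versions[name][-1]

    def versions(self, name):
        return list(self._versions[name])


history = OptionHistory()
history.set("data_path", "path", "/srv/tickets", changed_by="alice")
history.set("data_path", "path", "/srv/tickets-v2", changed_by="bob")
latest = history.current("data_path")
```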

Option Relations

This is what the relationship looks like between the services, groups and roles, and their option data. As I said above, this is better implemented as 3 option tables instead of these 2 layers, as the abstraction is more work and provides no real benefit.


Next Steps

This is where we are going over the next two articles. The first will cover creating a Change Management System (CMS) for this MSGR schema, so that changes can be made without immediately impacting the production or other environments. The second will be on creating what I’m calling a Floating Schema, which is a loose way to generally specify a schema that can be used by many scripts and services within this system.

This will allow us to create MSGR options that can be validated against a type system, even though the options are specified dynamically. It can also allow us to create a schema for the options, so that there are pre-set options for different services, groups and roles.

This is the fifth article in my series on creating comprehensive automation. Click here to jump to the first article.

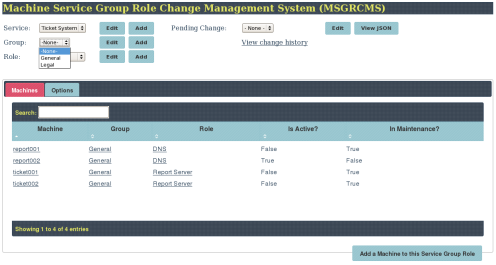

The article will walk through a series of screen shots demonstrating the workings of a simple Machine Service Group Role system with an integrated Change Management System.

This is more of a reference post, so just take a look at each header and the screen shot, and do your best to figure out how that maps to the Service >>> Group >>> Role three layer structure I discussed in the last article.

In the next articles I will talk about how the schema for this system is laid out, and then I will begin building a new system from the ground up to show all the steps involved in building a comprehensive automation system.

Note: Some of the data in these screen shots is a little off (for example, the report servers say DNS). Try to ignore it, as it’s example data.

An MSGR CMS application

Service: Ticket System Groups: General or Legal

Service: Ticket System Group: General Roles: Report or Ticket servers

Editing a Machine entry

Something changed: Is Active = True

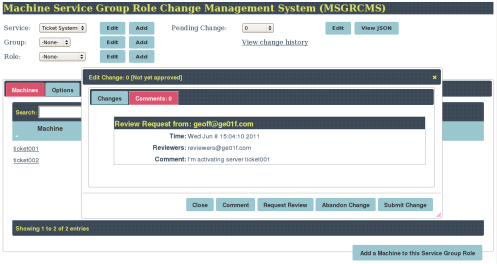

Editing the Change List shows the Pending Change

Requesting a review of this change sends out an email

The Review Request shows in the Change Comment History

The first comment on this change says to abandon it

But the next comment overrules that and says to ship the change

Confirming submission of this change

The change has been made. Is Active = True for ticket001 and there are no more Pending Changes

Services, Groups and Roles all have some basic data, in this case Puppet and Wiki integration

I have added custom data to the Service and Group in this configuration: A path, a name, and a stale joke

Editing Group option data allows all the host roles that work with this Service Group to have access to this custom data for templating or performing actions

Wrap Up

I hope I made the work flow of these screen shots easy enough to follow, but if I didn’t I’ll try to remedy that by describing how to build a system like this in detail, by building it step by step and writing it up as I go.

This is the fourth article in my series on creating comprehensive automation. Click here to jump to the first article.

The article will discuss the foundation of the core schema behind my comprehensive automation authoritative database.

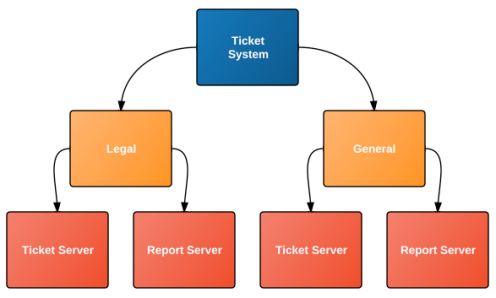

Service >>> Group >>> Role

I use a three layer system to track information about my automation system: Services, Groups and Roles.

I divide up all the information I have about configuration, or that I would like to track, and I apply them to one of these areas, depending on whether they are general (Service), are about the data set (Group), or are about a host that performs work (Role).

When it is time to provision, configure, test, monitor or take actions on a host, it is the Service, Group and Role data that is specified in the authoritative automation database that gives the options and commands for what to do. These may use the data raw, or may have used the data to query, process, or template configuration information for performing actions on a host.

Service

This is an operational level service, higher in the stack than a service like SSH that runs on an operating system. This is the kind of service you would talk about as in “the database is up”. You are not referring to mysqld running, you are referring to the service that mysqld, its corresponding data and storage, and the ability to get traffic there are providing.

Group

This is like a group of data. Different collections of data are separated by group. They are data for the same overall Service, but the data needs to be differentiated, and will likely be accessed by different IP addresses and host names.

An example application can be a ticket system in which two sets of data need to be tracked, one for the business’s legal department, and another for the rest of the company. The legal department’s data cannot be stored on the same hosts as the rest of the company’s tickets, because they contain sensitive data and for increased security they are run on a machine in a locked cabinet.

Both ticket systems will run the same software, and provide the same business service, and so they are in the same Service: Ticket System.

The Ticket System service needs to track both the legal department and the general tickets, and so two Groups are needed: Legal and General.

Role

This is the role of a host, for a given Service’s Group’s data.

This specifies what this host has to do for this service, operating on its group’s data. A service can have any number of roles, so that they are a microcosm in themselves. All of those roles operate on the same set of data, which is specified by the group.

Roles determine what packages are installed on a host, and what operating system level services it is running. Roles also determine the security requirements for this host, since it may need to accept traffic on ports, or allow users to log in (such as a Bastion role).

Example

This diagram shows four roles, which could be on four separate hosts. However, they could also share hosts, so that both Ticket Servers are on a single host, and both Report Servers are on a single host. It could also be provisioned so that they are all running on a single host.

This hierarchical description makes no preset requirements for how these services are configured, only that they have this structure.

Why?

The reason to use these three layers to track our services, data groups and host roles is that we can store configuration information at each level, and create a repeatable pattern that can be used for an infinite number of different data sets.

Because optional data can be set, each of these data set systems can operate differently from the others. There is a uniform way to manage the information about our systems: it can create them all to work identically on their individual data sets, or have each work in its own way on its data set, all from a single unified configuration.
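One way to picture this is to layer the option data in the same service, group, role order used for includes. The override rule below (a more specific layer wins on a name clash) is my assumption for the sketch, and the option names and values are made up.

```python
def effective_options(service_opts, group_opts, role_opts):
    """Merge option data from general to specific.

    Assumed rule for this sketch: group values override service values,
    and role values override both.
    """
    merged = {}
    for layer in (service_opts, group_opts, role_opts):
        merged.update(layer)
    return merged


# Hypothetical option data for the Ticket System example.
service_opts = {"data_path": "/srv/tickets", "listen_port": 443}
general_group = {}
legal_group = {"data_path": "/srv/tickets-legal"}
report_role = {"packages": ["report-server"]}

general_report = effective_options(service_opts, general_group, report_role)
legal_report = effective_options(service_opts, legal_group, report_role)
```

Both groups share one configuration pattern, yet the Legal group ends up with its own data path without any special-case code.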

Read the next article: Walkthrough of a Machine Service Group Role Change Management System

This is the third article in my series on creating comprehensive automation. Click here to jump to the first article.

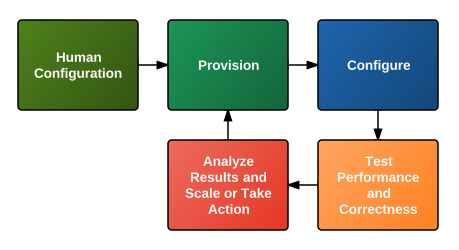

This article is a deeper introduction to one of my core concepts for comprehensive automation: the four phases of automation. All efforts in operations fall into one of these areas, and so we are starting with comprehensive coverage of the operational problem set.

The Four Phases

In my attempt to comprehensively automate operations, I have broken down the full life-cycle of the process into the following effort phases:

Provisioning

The creation of new usable hosts, or removal of existing hosts. Automatically scales usable hosts from existing physical hardware, virtual infrastructure or vendor infrastructure. The provisioning process should ideally end with a host that is network capable, but receives no traffic and has no distinct configuration. In some cases it is better to bake some things into the provisioning installation image to not require post-provisioning configuration, but these things then need to have their life-cycles managed from the image, which should change less and is harder to test, instead of during configuration which is expected to change frequently and is easier to test.

Configuration

After a host is usable on the network, this stage creates directories, sets permissions, installs packages and adds users, groups, services and files. After the configuration phase, this should now be a usable host. It should be able to serve requests, have any authorized users access it, and do whatever else it is supposed to do. You know the configuration is done when all the correctness and performance tests pass. Configuration is done after provisioning, but will be done periodically or on system update events, such as if a configuration file needs to be re-templated because a new host is available to accept traffic.

Test Performance and Correctness

This stage is both testing in the traditional sense, and monitoring in the traditional sense. The only difference between tests and monitors is the expectation that monitors will not cause performance degradations, and can be run consistently at a short interval (e.g. 5 seconds to 5 minutes). Tests against performance show degradation and could cause a performance Service Level Agreement (SLA) to be violated, which causes provisioning to scale more hosts. Tests against correctness show valid function and data, and violations against SLA will likely cause service or host restarts, or retrieval of data stored elsewhere to cure data corruption, but also will likely cause this host to be de-provisioned and the provisioning of a new host.

Analyze Results and Scale or Take Action

Testing and monitoring creates time series data, which can be analyzed and summarized and compared to SLA tolerances. If SLA tolerances are violated, then corrective actions can be taken, which may restart a service, or de-provision a host, or request the provisioning of new hosts. When all actions have been taken and an SLA violation cannot be cured then humans should be paged to evaluate the state of the system and make configuration changes to either change the SLAs, provide access to new hardware or vendor infrastructure, or make a decision that cannot be made by the automation scripts.
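The escalation described above can be sketched as a loop that tries corrective actions in order and only pages a human when none of them cures the violation. The threshold, the latency numbers and the two actions are invented for the example. Real actions would call out to provisioning and service management.

```python
from statistics import mean


def cure_violation(is_violated, actions):
    """Try corrective actions in order until the SLA check passes.

    Returns (names of actions attempted, whether a human must be paged).
    """
    attempted = []
    for name, action in actions:
        if not is_violated():
            break
        action()
        attempted.append(name)
    return attempted, is_violated()


# Hypothetical time series summary and SLA tolerance.
state = {"latency_ms": [900.0, 950.0, 1000.0]}
SLA_MAX_MEAN_LATENCY_MS = 500.0


def latency_violated():
    return mean(state["latency_ms"]) > SLA_MAX_MEAN_LATENCY_MS


def restart_service():
    pass  # in this example a restart does not change latency


def provision_host():
    # Pretend a new host halves the observed latency.
    state["latency_ms"] = [sample / 2 for sample in state["latency_ms"]]


attempted, page_human = cure_violation(
    latency_violated,
    [("restart_service", restart_service), ("provision_host", provision_host)],
)
```

If the list of actions runs out while the check still fails, `page_human` comes back true, which is the point where a person changes the SLA, adds resources, or makes the decision the scripts cannot.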

Human Configuration of the Automation Process

Humans should not make changes directly in a comprehensive automation solution, though doing read only tasks is acceptable and desirable for building and keeping familiarity with the working system. If changes are manually made then the state of the system is no longer completely known and potential side effects have just been introduced. The comprehensive automation is now an ad hoc system, and will need all manual changes to be removed and replaced with strictly automated configuration to restore the comprehensive automation.

Changes need to be made to the automation configuration plan in the authoritative database, and then that data will flow through the system to be used automatically by the comprehensive processes.

Read the next article: The Foundation of the Automation Schema

The second step to building a comprehensive automation system, after accepting the pros and cons of a comprehensive approach, is to create an authoritative database. A single authoritative database. One data source.

Why one?

0, 1, Infinity. There is no 2.

Zero One Infinity is a theory that is at home in operational automation.

You can have none of something. You can have one of something. You cannot stop at 2 somethings once you pass 1 of something.

Once you have made more than one of something, there will be more reasons to make more versions of that thing. It is inevitable. If there was a compelling reason not to try to handle both cases in a general system (one of that thing), then there will be additional compelling reasons for more versions of that thing to be created.

This should be seen as an Operational Law: 0, 1, Infinity. There is no 2.

Using this as an operating law allows us to quickly apply this rule to a problem, and determine if we should not create a given solution, create a single solution system that can handle all problems in its domain, or create a solution that manages an infinite amount of solution systems.

These are the only ways we approach problems to render a comprehensive solution, because to violate this law and create a second system we have knowingly created a solution system that will begin increasing in count, but was not designed to manage the many possibilities this could create. It is better to approach a >1 scenario as if it will need an infinite (potentially all resources available) approach, and not paint ourselves into a corner by planning to fail to handle this growth.

An Authoritative Source

If automation is going to be able to replace humans for a given task, it must be able to perform functions that are an acceptable replacement for a human’s efforts.

What is an acceptable replacement for a human’s efforts? My goal for automation is that the automation system does exactly what I would have done in the same situation, if I knew all the details of the situation, and was making the best decision possible.

When I show up to an event to deal with something I want to know what is going on, and so I start collecting information from graphs, monitoring statistics, host and process performance and log information, network utilization and availability, and every other source of information I have the resources to collect in a reasonable amount of time before having to make a decision about an action to take about the observed event.

When I have gathered this information, I have created an authoritative source in my mind of what the situation is. I have my understanding of the architecture, how it was built, what pieces are in play, how the request traffic flows, and the goals for each request.

Using this authoritative information I can make a determination about an action to take, such as restarting a service, or redirecting traffic to a different location, to resolve whatever problem has occurred. This process I go through is documentable, and many organizations create run books or play books for their NOC, and that means it can also be documented in code.

Documenting automation processes to perform in code has been going on for as long as people have been writing code, but having an authoritative database that can be used for every step in the operational life-cycle is presently exceedingly rare, and the first step to creating a comprehensive automation system.

The Single Source of Data, For Everything

An authoritative database that contains all the information necessary to run the comprehensive automation system fits into our “0, 1, Infinity” rule by being 1. There is 1 authoritative database that contains all the information necessary to run all the automation in the overall system, comprising all the systems.

There are of course an “infinite” number of databases in any overall system. Almost every program has configuration files, or takes arguments which need to be stored somewhere (perhaps in a script, a logically interpreted database). Each of these files or databases is a separate data source. Each may contain uniquely represented data for driving that program which does not exist and is not needed anywhere else in the system.

So which is it? An infinite number of databases or a single database?

A single authoritative database is capable of providing source information to seed other databases, and also being an authoritative source on where other authoritative data lives. As long as there is a single root of authority to reliably query to get authoritative data or a location of authoritative data, it is an authoritative data system.

This can be comparable to the Internet’s DNS system, which has authoritative root servers, which give you information on the location of authoritative domain servers. The root servers are the ultimate initial authority, but they do not have all the information.
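A toy version of this single root of authority: each key in the root either holds the value itself or names the source where the authoritative value lives. The keys, the `rrd` source name, and the dictionary layout are hypothetical.

```python
def resolve(root, sources, key):
    """Ask the root first: it answers directly or refers us to another source."""
    entry = root[key]
    if "value" in entry:
        return entry["value"]
    return sources[entry["source"]][key]


# The root holds some data itself and only a referral for the rest.
root = {
    "ticket.listen_port": {"value": 443},
    "ticket.latency_history": {"source": "rrd"},
}

# Other data sources, reachable only because the root knows where they are.
sources = {
    "rrd": {"ticket.latency_history": [120, 135, 128]},
}

port = resolve(root, sources, "ticket.listen_port")
latency = resolve(root, sources, "ticket.latency_history")
```

Every script starts at `root`, so there is still exactly one place to ask, even though the data itself is spread across many stores.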

DNS follows the 0, 1, Infinity rule. There is 1 DNS system for the Internet, and therefore there is 1 Internet. There are many (infinite) networks, one even calls itself “Internet2”, but only a single Internet. Any efforts to try to create 2 of this something have been roundly rejected.

The Structure of an Automation Database

To understand what needs to be stored in an automation database, it is important to understand the entire system you are trying to comprehensively automate.

I break comprehensive automation of system/network operations down into 4 phases, giving me 100% coverage of the operational life-cycle, and thus I have hooks to hang all my data on. Having started with everything I want, I can start to sub-divide those areas to finer granularity, until I have it down to a database schema that can describe every element required to satisfy my 4 comprehensive phases.

My 4 life-cycle phases of comprehensive system and network automation are:

- Provisioning

- Configuration

- Test functionality and performance

- Analyze results and take actions, generating more results to analyze

This life-cycle repeats from 1 to 4 to 1 again, when new hosts are required for additional scaling or to replace a broken host. After a successful configuration, such as passing all the functional and performance tests, a host will oscillate between life-cycles 3 and 4, monitoring and analysis, until a functional or performance Service Level Agreement violation occurs. Life-cycle 2, Configuration, is also re-run periodically, or on a system update event, such as adding or removing a host from a configuration file.
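The cycle can be written down as a small transition function. This is a simplified sketch: it assumes an SLA violation always leads back to provisioning, while in practice a restart or other corrective action may come first. The names are mine.

```python
from enum import IntEnum


class Phase(IntEnum):
    PROVISION = 1
    CONFIGURE = 2
    TEST = 3
    ANALYZE = 4


def next_phase(phase, *, sla_violated=False, config_update=False):
    """Return the phase that follows `phase` for a host."""
    if phase == Phase.PROVISION:
        return Phase.CONFIGURE
    if phase == Phase.CONFIGURE:
        return Phase.TEST
    if phase == Phase.TEST:
        return Phase.ANALYZE
    # After analysis: scale or replace, re-configure, or keep monitoring.
    if sla_violated:
        return Phase.PROVISION
    if config_update:
        return Phase.CONFIGURE
    return Phase.TEST


# A healthy host oscillates between phases 3 and 4.
steady_state = [next_phase(Phase.TEST), next_phase(Phase.ANALYZE)]
```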

These are only 4 steps, but by using set theory to divide my problem, I can provide 100% coverage of efforts. Having provided 100% coverage of efforts, I can attach data to these efforts.

What does it take to complete an effort? Provisioning, configuration, testing, analysis and taking action are each related to each other, and share data. In fact, the data they do not share is significantly smaller than the data they do share, as they are all operating on the same elements.

Where do humans fit in?

Humans fit in in a few places:

- Humans occupy the previously unlisted #0 spot on the now incorrectly named 4 Phase Life-Cycle: configuration of: goals (SLAs), services provided (operational services), resources to use (physical hardware and vendors), and procedures to follow to collect information and make decisions about taking actions, and how to take those actions.

- Humans are called upon during phase 4 when a situation that the automation system cannot cope with is encountered. These conditions are mainly restricted to multiple failures at attempting to cure SLA violations, as all monitoring is based on goals to be solved, again using set theory to divide the problem space so that it gets 100% coverage initially at a coarse grain, but becomes finer with additional information collection and layered decision making.

- During SLA violations humans would be required to adjust the SLA levels, or add additional physical hardware or vendor options to cure any current SLA violations.

- Humans might also write additional scripts, or specify additional packages to be included. Since environments are comprehensively provisioned and configured by the comprehensive automation system, staging and QA environments can be created that will accurately test the changes being introduced to the environment.

- Humans also need to verify that their intentions are being met by the changes, and that the changes were effective. This is largely a subjective process, and unsuitable for automation.

Four Phase Life-Cycle Schema Elements

Provisioning, configuration, testing, analysis and taking action in an operational automation system require the following elements:

- Hardware inventory (for physical installations)

- Platform specifications (OS installation information, such as Linux)

- Package specifications (installation information for services that run on host operating systems, such as Apache)

- Provisioning method (Kickstarting, VM provisioning, vendor instance provisioning)

- A host/machine concept for working with a provisioned host

- Storage inventory (for physical installations)

- Storage specifications (for how to use physical, virtual or vendor storage)

- A concept of an operational service (not a service that runs on an OS, but some level of service above that, such as “Web Page Requests”, instead of Apache)

- Service interface configuration (how operational services interact with other operational services)

- Security and access information

- Script and data locations for running jobs and configuration

- Physical locations (for where physical hardware or vendors are located)

- External locations of collected information (time series: RRD, databases, etc.) and meta-data about that collection (schedule to collect, last collected, historical success)

- Process specifications (how to do everything, comprehensively and with as much detail as has been added, assuming an infinite number of possible specifications)

Each of these areas requires quite a bit of expansion, which I will begin in my next article, which will go into more detail about the Four Phase Life-Cycle and start creating the schema outline. To do this comprehensively, it must first be described with 100% coverage of the structure, and then additional data can be filled in without affecting the whole.

One system to deal with infinite possibilities.

This is the first post in a new series intended to outline my process of comprehensively automating operational environments.

Why Comprehensive Automation?

Before getting into how to create comprehensive automation, you should first decide if this is something you want to do.

Pros of Comprehensive Automation

- Creates a path to automating all aspects of operations: you can get there from here.

- Allows the possibility of synchronized development, QA, staging and production environments.

- All basic operations are automated, and details and updates are added over time, creating a more and more accurate automation plan that covers more tasks that humans would otherwise have to perform.

- Automation between systems can be verified to be in-sync.

- All data can eventually be brought into the automation system, and used as metrics and for testing correctness.

- Improve the quality of work humans have to do (more planning, less firefighting), and reduce the maximum number of humans required to maintain a complex production system.

- More services and hosts can be managed, as their complexity and scale is mitigated by the comprehensive automation.

- Comprehensive automation systems can create more detail in their automation decision making, and can take a wider variety of actions with confidence that the best information was used at the time, due to having an authoritative database to test current conditions against, to make an informed automated decision and take action without human intervention. Non-comprehensive automation systems lack an authoritative database to have enough information in any given situation to reliably take detailed actions, and so their maximum complexity has limitations and obstacles to implementation. These are facilitated in a comprehensive automation environment.

- Reduces doubt of unintended side-effects when making changes to production systems: operational changes can be tested in accurately modeled development, staging and QA environments.

- New complete environments can be created or destroyed as needed, to test changes to operation before deploying to production.

- Environments can scale elastically based on Service Level Agreement style specifications.

Cons of Comprehensive Automation

- Requires system thinking: understanding the environment as a complete system with all the parts connecting together as they do.

- Requires trusting your ability to test your automation, and update configurations. Full life-cycle management is always a concern, and no tasks are truly one-offs.

- Any changes made outside the comprehensive automation subvert the automation, and can quickly destroy all benefits gained unless rolled back in to the automation system quickly.

- Comprehensive automation is new, and vastly different from manual and non-comprehensive automation. Reliable manual operations are hard, and writing solid automation is hard. Comprehensive automation is harder and requires a more thorough understanding of all areas of operations.

- Comprehensive automation is by definition all-or-nothing. You cannot partially implement a comprehensive automation system, but you can implement a thin skeleton comprehensively and fill in details over time.

If you want to completely automate your environment, you must first start from the position of wanting to completely automate your environment. I do not know of any completely automated environments that currently exist, large or small, and I believe this will stay the case for some time until this discussion picks up steam and whole teams and departments can commit to comprehensively automating their environments.

Without complete acceptance of the rules associated with comprehensive automation it is better to accumulate automation, because the rules will eventually be breached but the assumptions that the system still has comprehensive integrity will remain, causing potential failures without cure or ability to alert when they were supposed to be comprehensively covered.

♦ Becoming fully comprehensive can be done in a legacy environment, but must be done in such a way that creates comprehensive coverage. I will cover how to do this starting with the next article.

Why Comprehensive Automation is All or Nothing

Why can’t you build your way up to automating comprehensively?

It’s a valid question. Many tasks are approached by taking on the first things that need to be accomplished first, and then adding more things over time when they are required and resources allow it.

I think this can be most easily understood by looking at the stages required for comprehensively automating a system, or trying to accumulate automation until it becomes complete.

Accumulation Method of Automation

- Determine problem to automate.

- Determine operational location to install automation.

- Gather information required to perform automation in designated operational location.

- Perform automation of specified problem at specified operational location.

- Repeat as needed, covering all required operations tasks.

Comprehensive Method of Automation

- Determine full life-cycle of problem space.

- Determine all operational locations required to manage full life-cycle of problems.

- Gather information about full problem space and operational goals to a single authoritative data source of some kind.

- Perform operations at each location required, on each problem set required, using the single authoritative data source. Synchronization is required between tasks, so distributed lock and message queue systems are recommended.

- Once running, additional problems and deeper levels of details are added to the system’s automation structure, but the structure is rarely changed as it initially included the full life-cycle of the problem being solved.

Accumulation vs Comprehensive

The accumulation method provides aggregated changes over time. You could imagine an empty warehouse being filled with a new piece of equipment each time automation is added, and then integration must occur between this new machinery and any existing machinery. Sometimes machinery may replace older machinery, or duplicate efforts, and coordination will need to occur.

The comprehensive method starts with all machinery placed in the warehouse before anything is turned on. At the start of a comprehensively automated system, the full life-cycle is already being automated. Details and additional functionality are added to the existing comprehensive system by either replacing or augmenting existing machinery. The total flow from machine to machine that automates the warehouse was present before the change, and must be balanced with any new interfaces the new or updated machine introduces. Because the environment had 100% basic operational coverage from the beginning, a change adds depth to existing machines but does not change the nature of the automation.

Accumulative changes often alter the nature of the automation. An example: previously provisioning was done manually by humans, but now this stage is automated for hosts of type A, but not yet for hosts of type B.

Comprehensive automation requires all systems to have provisioning automated, with no humans involved, from the beginning, and so type A hosts would be automatically provisioned. When type B hosts are added to the system, there is no discussion about whether to introduce their provisioning as a different method. The provisioning system is updated to handle type B systems as a different case from type A systems, and both receive automated provisioning using the same general provisioning method, which calls their specific provisioning method.
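As a minimal sketch of that general-method-calls-specific-method idea, here is one way it could look in Python. The registry, the decorator and the host type names are all hypothetical, made up for illustration; they are not part of any real provisioning tool.

```python
from typing import Callable, Dict

# Hypothetical registry: host type -> its specific provisioning function.
PROVISIONERS: Dict[str, Callable[[str], str]] = {}


def provisioner(host_type: str):
    """Register a type-specific provisioning function under host_type."""
    def register(func: Callable[[str], str]) -> Callable[[str], str]:
        PROVISIONERS[host_type] = func
        return func
    return register


@provisioner("A")
def provision_type_a(hostname: str) -> str:
    return f"{hostname}: provisioned with type A steps"


@provisioner("B")
def provision_type_b(hostname: str) -> str:
    return f"{hostname}: provisioned with type B steps"


def provision(hostname: str, host_type: str) -> str:
    """General provisioning entry point; every host goes through here."""
    try:
        specific = PROVISIONERS[host_type]
    except KeyError:
        # An unknown type is a gap to fix in the automation,
        # not a cue to provision the host by hand.
        raise ValueError(f"no provisioner registered for host type {host_type!r}")
    return specific(hostname)
```

Adding a type C host later means registering one more function. The entry point, and everything that calls it, stays the same.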

Where the accumulative system tries to build a house from the ground until it reaches skyscraper height, the comprehensive system builds the shell of a skyscraper, and then adds more elevators and furniture as time goes on.

If you tried to build a regular house, and then modify it into a skyscraper, it would be obvious fairly early in the upgrading process that the foundation and structure of the house are not fit to eventually become a skyscraper. The differences in engineering magnitude are easily apparent in this physical example, and yet the differences between approaches in production operation automation systems are harder to see.

The Second Step to Comprehensive Automation: The Authoritative Database

Accepting a systemic, comprehensive, full life-cycle style of automation is not easy. I have found people are very interested in gaining the benefits, but not very interested in changing their work patterns to get access to them. I believe that acceptance of the effort required to gain the potential rewards of comprehensive automation will probably be a barrier until what makes a comprehensive automation system tick is more commonly understood.

In the next article on this topic I’m going to cover why an authoritative database is crucial to comprehensive automation, and how you can create one to cover your system and network operations comprehensively.

Read the next article: The Authoritative Automation Database

Framing The Problem with Directly Comparable Terminology

Consistency, Availability and Partition-Tolerance. To begin, which of these things doesn’t belong with the others?

Consistency can be directly measured: Is the data/code consistently the same when accessed from different places at specified times (for a given version of data)?

Availability can be directly measured: Is the data/code available from different locations at specified times?

Partition-Tolerance cannot be directly measured: What would you look at to measure partition-tolerance? It is a contextual process, which means that for a given type of partition, an action would be taken which would try to handle the issues of that partition. The problem is that each step of the process is also subject to partitions (which I will describe in detail below), and so Partition-Tolerance is not of the same order as Consistency or Availability.

The original theorem proposed it this way, and thus framed the discussion, and in my view it is incorrectly framed and this leads to problems.

So to start I’m changing “Partition-Tolerance” to “Partitions”, since tolerance to partitions cannot be directly measured but a partition occurring can be directly measured.

The Order: Success, Success, Failure

CAP obviously sounds a lot better, as it maps to a real word; that probably got it remembered.

However, I’m guessing the name has also made the concept harder to understand. The problem is that the “P” comes last.

CAP: Consistency, Availability, Partitions.

Consistency == Good.

Availability == Good.

Partitions == Bad.

So we know we want C and A, but we don’t want P. When we talk about CAP, we want to talk about how we want C and A, and let’s try to get around the P.

Except that the entire principle behind the “CAP Theory” is that Partitions are a real event that can’t be avoided. Have 100 nodes? Some will fail, and you will have partitions between them. Have cables or other media between nodes? Some of those will fail, and nodes will have partitions between them.

Partitions can’t be avoided. Have a single node? It will fail, and you will have a partition between you and the resources it provides.

Perhaps had CAP been called PAC, then Partitions would have been front and center:

Due to Partitions, you must choose to optimize for Consistency or Availability.

The critical thing to understand is that this is not an abstract theory; this is set theory applied to reality. If you have nodes that can become parted (going down, losing connectivity), and this cannot be avoided in reality, then you have to choose whether the remaining nodes operate in a “Maximize for Consistency” or “Maximize for Availability” mode.

If you choose to Maximize for Consistency, you may need to fail to respond, causing non-Availability in the service, because you cannot guarantee Consistency if you respond in a system with partitions, where not all the data is still guaranteed to be accurate. Why can it not be guaranteed to be accurate? Because there is a partition, and it cannot be known what is on the other side of the partition. In this case, not being able to guarantee accuracy of the reported data means it will not be Consistent, and so the appropriate response to queries is to fail, so they do not receive inconsistent data. You have traded availability, as you are now down, for consistency.

If you choose Availability, you will be able to make a quorum of data, or make a best-guess as to the best data, and then return data. Even with a network partition, requests can still be served, with the best possible data. But is it always the most accurate data? No, this cannot be known, because there is a partition in the system and not all versions of the data are known. Concepts of a quorum of nodes exist to try to deal with this, but with the complex ways partitions can occur, these cannot be guaranteed to be accurate. Perhaps they can be “accurate enough”, and that means, again, that Consistency has been given up for Availability.
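The two modes can be sketched as a toy read function. This is only an illustration under simplifying assumptions: the `Replica` class, the `maximize` argument and the idea that partitions show up as a `reachable` flag are all hypothetical, and a real distributed data store is far more involved.

```python
from dataclasses import dataclass
from typing import Dict


class Unavailable(Exception):
    """Raised when the system declines to answer."""


@dataclass
class Replica:
    value: str
    version: int
    reachable: bool = True


def read(replicas: Dict[str, Replica], maximize: str) -> str:
    """Read a value, maximizing for 'consistency' or 'availability'."""
    reachable = [r for r in replicas.values() if r.reachable]
    if not reachable:
        raise Unavailable("no replica can be reached")
    if maximize == "consistency" and len(reachable) < len(replicas):
        # An unreachable replica may hold a newer version, so refuse to answer.
        raise Unavailable("partition detected; refusing to return possibly stale data")
    # Best guess: the newest version among the replicas that can be seen.
    return max(reachable, key=lambda r: r.version).value
```

With one replica cut off, the availability mode answers with the newest data it can see, which may be stale, while the consistency mode raises `Unavailable` instead of guessing.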

Often, giving up Consistency for Availability is a good choice. Message forums, community sites, games, and other systems that deal with non-scarce resources benefit from releasing the requirement for Consistency, because it’s more important that people can use the service, and the data will “catch up” at some point and look pretty consistent.

If you are dealing with scarce resources like money, airplane seat reservations (!), or who will win an election, then Consistency is more important. There are scarce resources being reserved by the request; to be inconsistent in approving requests means the scarce resources will be over-committed and there will be penalties external to the system to deal with.

The reality of working with systems has always had this give and take to it. It is the nature of things to not be all things to all people, they only are what they are, and the CAP theory is just an explanation that you can’t have everything, and since you can’t, here is a clear definition of the choices you have: Consistency or Availability.

You don’t get to choose not to have Partitions, and that is why the P:A/C theory matters.

Today’s system and network monitoring primarily consists of collecting counters and usage percentages, and alerting if they are too high or low. More comprehensive analysis can be performed by using the same troubleshooting logic a human would apply on encountering a system condition, such as a resource being out of limits (ex. CPU at 90% utilization). Encapsulating this logic along with the alert condition specification lets automation follow the same procedures that would ideally be done by the human on duty.
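One possible shape for keeping the troubleshooting logic next to the alert condition is sketched here. The `AlertRule` class, the metric names and the three outcomes are hypothetical; the point is only that the condition and the follow-up reasoning travel together.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

Metrics = Dict[str, float]


@dataclass
class AlertRule:
    name: str
    condition: Callable[[Metrics], bool]     # when is the resource out of limits?
    troubleshoot: Callable[[Metrics], str]   # what would the human on duty check next?

    def evaluate(self, metrics: Metrics) -> Optional[str]:
        """Return the recommended action, or None if the condition is not met."""
        if not self.condition(metrics):
            return None
        return self.troubleshoot(metrics)


def diagnose_high_cpu(metrics: Metrics) -> str:
    # Hypothetical decision steps, in the order a person might try them.
    if metrics.get("top_process_cpu_pct", 0.0) >= 80.0:
        return "restart runaway process"
    if metrics.get("requests_per_sec", 0.0) > metrics.get("expected_requests_per_sec", 0.0):
        return "add capacity"
    return "page on-call"


high_cpu = AlertRule(
    name="cpu_out_of_limits",
    condition=lambda m: m.get("cpu_pct", 0.0) >= 90.0,
    troubleshoot=diagnose_high_cpu,
)
```

Calling `high_cpu.evaluate(metrics)` returns `None` while CPU is within limits, and otherwise the action the encoded procedure arrives at.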

By constantly aggregating data across services into new time series, this can be analyzed and re-processed in the same way the original collected data was, to create more in-depth alerting or re-action conditions, or to create even higher levels of insight into the operations of the system.
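A minimal sketch of that aggregation step, assuming each service’s series is a plain mapping of timestamp to value (a hypothetical in-memory layout, not any particular time series database):

```python
from typing import Dict

Series = Dict[int, float]  # unix timestamp -> value


def aggregate(series_by_service: Dict[str, Series]) -> Series:
    """Sum every service's value at each timestamp into one new series."""
    totals: Series = {}
    for series in series_by_service.values():
        for timestamp, value in series.items():
            totals[timestamp] = totals.get(timestamp, 0.0) + value
    # Sorted by timestamp so the result can be processed like any collected series.
    return dict(sorted(totals.items()))
```

Because the output has the same shape as the input series, it can be fed back into the same alerting and analysis code as the originally collected data.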

The key is to create the process exactly as the best human detective would do it. The processes need to map directly to your business and organizational goals, and this is easiest to keep consistent over many updates if they are modeled after an idealized set of values for solving those goals.

For example, a web server system could be running for a media website, which makes its money on advertising. They have access to their advertising revenue and ad hit rates through their provider, and can track the amount of money they are making (a counter data type), up to the last 5 minutes. They want to keep their servers running fast, but their profit margins are not high, so they need to keep their costs minimal. (I’m avoiding an enterprise scale example in order to keep the background situation concise.)

To create a continuous testing system to meet this organization’s needs, a series of monitors can be set up to collect data about all relevant data points, such as using an API to collect from the advertising vendor, or scraping their web page if an API isn’t available. Hourly cost (a counter data type) and the count of running machine instances (a gauge data type) can be tracked to provide insight into the current costs, to compare against advertising revenues.

In addition to financial information, system information such as the number of milliseconds it takes their web servers to deliver a request (a gauge data type) can be stored. They have historical data that says they get more readers requesting pages and make more money when their servers respond to requests faster, so having the lowest response times on the servers is a primary goal.

However, more servers cost more, and advertising rates fluctuate constantly. At times ads are selling for more, at times for less, and sometimes only default ads are shown, which pay nothing. During these periods the company can lose money by keeping enough machine instances running to keep their web servers responding faster than 200ms at all times.
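The difference between the two data types matters when comparing them. A gauge can be read directly, while a counter only becomes useful as a rate of change between samples. A small sketch of that conversion follows, with a hypothetical `(unix_seconds, value)` sample layout:

```python
from typing import List, Tuple

Sample = Tuple[int, float]  # (unix_seconds, value)


def counter_to_rate(samples: List[Sample]) -> List[Sample]:
    """Turn an ever-increasing counter into a per-second rate series."""
    rates: List[Sample] = []
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        if t1 <= t0 or v1 < v0:
            # Skip out-of-order samples and counter resets.
            continue
        rates.append((t1, (v1 - v0) / (t1 - t0)))
    return rates
```

For example, a revenue counter sampled every 5 minutes that moves from 100.0 to 250.0 gives a rate of 0.5 per second for that interval, which can then be compared against a cost rate computed the same way.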

By doing another level of analysis on the time series data for the incoming ad revenue, the costs of the current running instances and the current web server response times, an algorithm can be developed to maximize revenues during both periods of high advertising revenue and periods of low advertising revenue. This can change the response-time condition that triggers creating a new running instance: perhaps only 80% of request tests have to be under 200ms, instead of 100% of tests (out of the last 20 tests, at 5 second intervals), and at lower revenue returns the threshold is raised to 400ms. These values for tolerances and trends could also be saved in a time series to compare against trended data on user sign-ups and unsubscribes.
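As a rough sketch, that rule could be written as follows. The function names are hypothetical, and the rule for picking the threshold (revenue covering cost or not) is an assumption made for illustration; the 80%, 20 tests, 200ms and 400ms figures come from the example above.

```python
from typing import Sequence


def response_threshold_ms(revenue_per_hour: float, cost_per_hour: float) -> float:
    """Assumed rule: demand 200ms while revenue covers cost, else relax to 400ms."""
    return 200.0 if revenue_per_hour >= cost_per_hour else 400.0


def needs_new_instance(
    response_ms: Sequence[float],
    threshold_ms: float,
    required_fraction: float = 0.8,
    window: int = 20,
) -> bool:
    """True if too few of the last `window` tests beat the threshold."""
    recent = list(response_ms)[-window:]
    if not recent:
        return False  # no data yet, so no basis for adding an instance
    passing = sum(1 for ms in recent if ms < threshold_ms)
    return passing / len(recent) < required_fraction
```

With 16 of the last 20 tests under the threshold the 80% requirement is met and no instance is added; at 15 of 20 it is not. The same slow samples that trigger a new instance at 200ms can pass at 400ms during a low-revenue period.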

If the slow responses are causing user sign-ups to decrease in a way that impacts their long term goals, that could be factored into the cost allowed to keep response times low. This could be balanced against the population in the targeted regions of the world where they make most of their revenue, so they keep a sliding scale between 200ms and 400ms depending on the percentage of their target population that is currently using their website, weighted along with the ad revenues.
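One simple way to express such a sliding scale is a linear interpolation between the two limits. The equal weighting of population and revenue below is purely an assumption for the sketch, as are the function and parameter names; a real deployment would tune these against its own historical data.

```python
def sliding_threshold_ms(
    target_population_fraction: float,
    revenue_fraction: float,
    population_weight: float = 0.5,
    fast_ms: float = 200.0,
    slow_ms: float = 400.0,
) -> float:
    """Interpolate the response threshold between slow_ms and fast_ms.

    Both inputs are fractions in [0, 1]: the share of the target population
    currently on the site, and current ad revenue relative to its peak.
    """
    def clamp(x: float) -> float:
        return max(0.0, min(1.0, x))

    demand = (
        population_weight * clamp(target_population_fraction)
        + (1.0 - population_weight) * clamp(revenue_fraction)
    )
    # Full demand asks for the fast limit, no demand allows the slow limit.
    return slow_ms - (slow_ms - fast_ms) * demand
```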

This same method can work for deeper analysis of operating failures, such as selecting the best machine to be the next database replication master, based on historical data about how it behaves under load, how well it keeps up with its updates, and its network latency to its neighbor nodes. Doing this extra analysis could avoid selecting a new master that has connectivity problems to several other nodes, which would create a less stable solution than if the network problems had been taken into account.

By looking at monitoring, graphing, alerting and automation as a set of continuous tests against the business and operational goals, your organization’s automation can change from a passive alerting system into a more active business tool.
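A sketch of what that selection could look like, assuming the historical data has already been reduced to a few averages per candidate. The `Candidate` fields, the 50ms latency limit and the ordering of the criteria are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Candidate:
    name: str
    avg_replication_lag_s: float      # how far behind on updates, historically
    avg_load: float                   # historical load average
    neighbor_latency_ms: List[float]  # latency to each neighbor node


def score(candidate: Candidate, max_latency_ms: float = 50.0) -> Tuple[int, float, float]:
    """Lower is better: connectivity problems first, then lag, then load."""
    bad_links = sum(1 for ms in candidate.neighbor_latency_ms if ms > max_latency_ms)
    return (bad_links, candidate.avg_replication_lag_s, candidate.avg_load)


def choose_master(candidates: List[Candidate]) -> Candidate:
    """Pick the candidate with the best (lowest) score."""
    return min(candidates, key=score)
```

Because the score is compared as a tuple, a node with a bad link to a neighbor loses to a node with clean connectivity even if its replication lag is lower.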

As I’ve been developing the Red Eye Monitor (REM) system, I have spent a lot of time thinking about how to think about automation. How to talk about it, how to explain it, how to do it; all of it is hard because there are more pieces to system automation than can be kept in our brain’s symbolic lookup table at one time. I believe most people will top out at 3-4 separate competing symbols and there are somewhere between 10 and 20 major areas of concern in system automation, making it very difficult to keep things in mind enough to come up with a big picture that remains comprehensive as the detail magnification levels go up and down.

I had initially phrased REM as a “Total Systems Automation” tool, because it was meant to be comprehensive. Comprehensive automation is a fairly new idea; even though many people have been working on it for a long time, it just hasn’t really caught on yet.

Having attempted to explain what a comprehensive automation system consists of, and what benefits it has, I have now settled on a new, shinier and better name: Authoritative System Automation (ASA).

Why Authoritative System Automation?

During my many discussions with people about a comprehensive automation system, I have settled on the single most distinguishing element between this kind of system and other kinds of automation: there is only ONE concept of what the system should look like, the authoritative information that defines what the ideal system should be.

All benefits of an ASA system come from the fact that, with only ONE blueprint for the ideal system, all actual details (monitored and collected from reality) are compared to the ideal blueprint, and then actions are taken to remedy any differences. This could mean deploying new code or configuration data for a now-out-of-date system after a deployment request, restarting a service that is performing below service level agreements (SLA), provisioning a new machine to decommission a machine that fails critical tests, or paging a human to fill the loop on a problem that automation is not capable of or prepared to solve.
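The compare-and-remedy idea can be sketched in a few lines. This is a toy under stated assumptions, not REM’s implementation: the ideal and actual states are hypothetical nested dictionaries of host to settings, and the “actions” are just descriptive strings.

```python
from typing import Dict, List

State = Dict[str, Dict[str, str]]  # host -> {setting: value}


def reconcile(ideal: State, actual: State) -> List[str]:
    """List the actions needed to make the actual state match the ideal blueprint."""
    actions: List[str] = []
    for host, wanted in sorted(ideal.items()):
        observed = actual.get(host)
        if observed is None:
            actions.append(f"provision {host}")
            continue
        for key, value in sorted(wanted.items()):
            if observed.get(key) != value:
                actions.append(f"set {host} {key}={value}")
    # Anything running that the blueprint does not know about is removed.
    for host in sorted(set(actual) - set(ideal)):
        actions.append(f"decommission {host}")
    return actions
```

When the two states match, the list is empty. A change made by hand on a host shows up as a difference on the next pass and is reverted, because only the ideal blueprint is ever treated as correct.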

Authoritative is a superset of comprehensive, because all aspects of the system come from the authoritative data source. Authoritative data sources can only have 1 master. Systems could be split so that there are several systems that run independently, but to be an authoritative system, each of them has to respond only to the requirements as detailed by the authoritative data source that backs the authoritative system automation.

Authoritative System Automation is vastly different from typical automation practiced today, even if that automation is “comprehensive”. Any system where data resides in more than one place cannot be an authoritative system, and will need to be updated in multiple places for maintenance. Upgrades will need to track multiple places, and mismatches can occur where data is out of date in one system compared to another.

All of this reduces confidence in automation, and means that the system cannot truly be automated. It can only be mostly-automated, which is vastly different.

An Authoritative Automated System will never require or allow an independent change. Unless the piece being changed has been removed or frozen from automation, the ASA will revert the change or otherwise take corrective actions, and so the independent change is essentially an attack against the ASA. A properly configured and complete ASA will correct the change, and the system will continue.

Another aspect that is a major difference between an ASA and a non-ASA automated system is that an ASA system will have EVERY piece of data stored in the ASA’s authoritative data source. No configuration data will be stored in a text file. If it is configuration data, it is in the ASA data source, and is then mixed with templates to become a working configuration file or data when deployed to a system.
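A minimal sketch of data mixing with a template, using Python’s standard `string.Template`. The template text and the record fields are hypothetical; in an ASA the record would be read from the authoritative data source rather than written inline.

```python
from string import Template
from typing import Mapping

# The template holds only structure; every value comes from the data source.
WEB_SERVER_TEMPLATE = Template(
    "server_name = $name\n"
    "port = $port\n"
    "workers = $workers\n"
)


def render_config(template: Template, record: Mapping[str, object]) -> str:
    """Fill the template from one record; a missing field raises KeyError."""
    return template.substitute(record)


# Hypothetical record, standing in for a row from the authoritative data source.
record = {"name": "web01", "port": 8080, "workers": 4}
config_text = render_config(WEB_SERVER_TEMPLATE, record)
```

Using `substitute` rather than `safe_substitute` is deliberate here: a field missing from the data source fails loudly instead of silently producing a half-filled configuration file.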

This is a major change from most automation attempts, as they merge automated scripts, databases with information for the scripts to operate on, and configuration text and other data sources together, to create a fabric of automation.

An ASA cannot have a single piece of configuration data outside of the ASA data source, or it is no longer authoritative. If the non-ASA attempted to reconfigure something, a side effect can crop up between the new “authoritative” data update and some other source of automation or configuration data.

An ASA system must be built 100% from its authoritative data, and must be maintained 100% from its authoritative data. Any deviation, manual configuration or data embedded anywhere, breaches the goal of an ASA and creates a non-ASA.

I see Authoritative System Automation as the highest form of automation, and the only comprehensive option, as it is completely data based, and logic serves only to push the data out to reality, and to collect from reality to compare against the ideal. The benefit of an ASA system is confidence in the system doing the right thing. The system is testable and verifiable because at every stage in the automation, both the intent and expected result are known and are the same.

Glue is the most important part of any organization, and the part which is always unique to every organization. Understanding this, and knowing how to make and apply glue will make the difference between a smooth running organization and an organization that is constantly firefighting and often working against itself.

In more traditional organizations, glue was process. When you wanted to create cohesiveness between your employees and departments, you needed to create a process, train the players in performing the process, and then have a combination of rewards for following, and punishments for not following, the processes that glue your organization together.

Human processes will never be removed, because we are humans and will always have needs too subtle and changing, and human, to be automated.

That said, many things in today’s organizations can and should be automated, and this is the glue I spend most of my time making and thinking about.

The thing about organization glue is that it is always unique to your organization. You can buy off-the-shelf glue, but you still have to apply it uniquely, and take care of it uniquely, and train people how to use it uniquely, because no other business does exactly what your business does, with the exact people and structure your business has.

So whether you are a Buy-Everything-Microsoft-Makes shop or a Build-Everything-Myself-From-Open-Source shop, you are still configuring everything uniquely, to solve your unique problems.

Herein lies the reason more expert operations people choose Linux and other *nix environments: in these environments you are expected to make your own glue. All the components may come readily available, and many of the glues are already pre-mixed, but you are still expected to figure out where to put it, how to configure it, and probably to write your own custom glue code to connect piece X to piece Y, because they don’t quite line up.

In a pre-packaged environment, much of this has been done for you, many processes have already been worked out, and you are expected to implement them to specification. Certificate programs are created to align workers with the commercial packages they support to enforce these “best practices”.

The trouble comes when these pre-made glue stamps fail to meet all your organization’s needs, and then you must create custom glue. In an environment that expects or requires custom glue, this has a steep learning curve, but is expected and encouraged. In an environment where everything is supposed to be planned for you to implement, it is very difficult to add your own processes, and agility is lost in exchange for having the majority of your solution come out of a box.

Working around these unique elements, while still working with the system so as not to subvert the benefits you received from purchasing it, creates an extremely difficult situation, made worse by those who are not capable of, or do not believe in, custom solutions.

The real problem is one of expectations. As a unique service provider, your business will do some things uniquely. If you are in an industry where your service is all taken care of by humans, then your internal operations may well be simple enough to use off-the-shelf glue, and it will work well enough.

In the complex and ever changing world of internet software companies, this is not the case, and never has been. And yet, many people still do not understand that their organization requires custom glue, that their processes will not simply connect together, and that by leveraging the abilities of their senior staff to create custom solutions, that mix in with existing open source and purchased solutions, they can find an optimal balance between buying and building, that they never really had a choice between anyway.

You simply can’t buy it all, because no one sells “Your Business In a Box”. It’s up to you to build your business, and if you do it well, it will seem like it fits in a box. If you do it poorly, it will seem like a combination of post-Katrina wasteland and a forest fire.

Either way, it pays to understand that your business has unique goals, and that it will take unique glue to bind your employees and departments together to achieve those goals.